Amazon Web Services (AWS) just announced a new Application Load Balancer (ALB) service.

I spent some time playing with the new service to understand what it offers and to see how it fits into our cloud architecture.

In summary, ALB is a massive improvement over ELB in almost every way.

For $16/month, a single ALB can serve HTTP, HTTP/2 and websockets to up to 10 microservice backends. Now the hardest problems of monitoring containers and routing traffic to them, as ECS container orchestration constantly moves them around, are solved by configuring one ALB correctly.

We no longer need multiple ELBs or internal service discovery software in our microservice application stack to get traffic to our containers. We also no longer need hacks for websockets and HTTP referrers.

ALB has exactly what ECS needs to make our architectures even better.

I encourage you to also check out my EFS experiments and the rest of the Convox Blog for more hands-on coverage of the newest generation of AWS services.

If our application runs entirely on a single server, total downtime is guaranteed.

This server is a “Single Point Of Failure” (SPOF), and we can be certain that someday it will fail due to hardware or network problems, taking our app completely offline.

Simple but guaranteed to fail

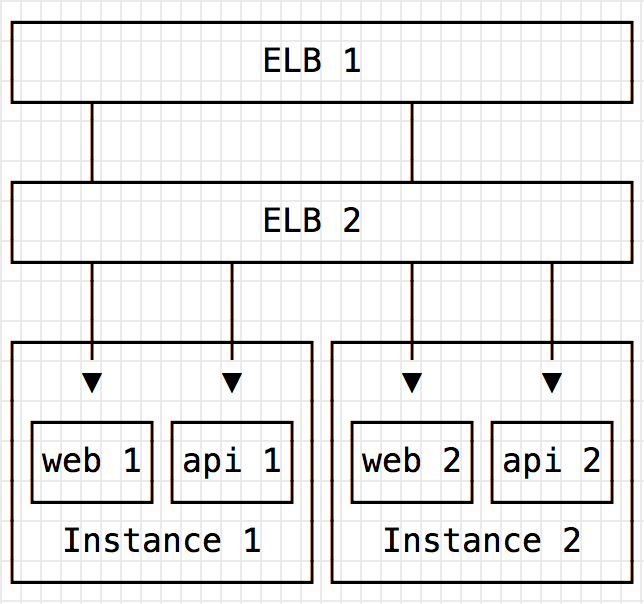

Since we want our app to be online forever, we need a more sophisticated architecture: a second server and a load balancer. Now when the first instance fails, the second one will still serve the app while automation replaces the failed instance.

Instance 1 can fail but the Load Balancer and Instance 2 keep going

It looks simple enough, but we’ve moved the hard problems to the load balancer itself. It needs

These are exactly the tough problems we need a service provider like Amazon to handle so we don’t have to.

In 2009, AWS launched the Elastic Load Balancing (ELB) service. From that day forward, ELB has been a mission-critical component for serious services running on AWS.

Load Balancing to Instances is a solved problem.

We’re never content as an industry…

Many of us are trying to do better than running a single service on a single instance. We’re trying to squeeze more efficiency out of those instances and treat them as a cluster with many services together.

Microservices need mega architecture

To do this with ELB we’re faced with some new architectural challenges.

If every service class needs its own ELB we will need lots of them. ELBs cost $18/month minimum, so the cost can really add up.

We can try to use a single ELB and manage the routing with software. Plenty of open source software like nginx, HAProxy, Consul, Kong, Kubernetes and Docker Swarm help to discover and proxy traffic to microservices and containers.

But going down this path means I’m again responsible for managing complex software. I want to delegate these tough problems to cloud services.

Conceptually, ALB has a lot in common with ELB. It’s a static endpoint that’s always up and will balance traffic based on its knowledge of healthy hosts.
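To make "balance traffic based on its knowledge of healthy hosts" concrete, here is a toy model in Python. It is only a sketch: the `HealthyRoundRobin` class and the `instance-1`/`instance-2` names are hypothetical, and AWS doesn't publish its balancing internals in this form, so the real algorithm may differ. It just shows the core idea of rotating through hosts and skipping any that failed a health check.

```python
import itertools


class HealthyRoundRobin:
    """Hypothetical sketch: spread requests over hosts, skipping unhealthy ones."""

    def __init__(self, hosts: list[str]) -> None:
        self.hosts = list(hosts)
        self.healthy = set(hosts)  # assume every host starts out healthy
        self._cycle = itertools.cycle(self.hosts)

    def mark_unhealthy(self, host: str) -> None:
        # e.g. the host failed its health check
        self.healthy.discard(host)

    def mark_healthy(self, host: str) -> None:
        # e.g. the host is passing health checks again
        if host in self.hosts:
            self.healthy.add(host)

    def pick(self) -> str:
        # Try each host at most once per request, in round-robin order.
        for _ in range(len(self.hosts)):
            host = next(self._cycle)
            if host in self.healthy:
                return host
        raise RuntimeError("no healthy hosts")


lb = HealthyRoundRobin(["instance-1", "instance-2"])
print([lb.pick() for _ in range(4)])  # alternates between the two instances
lb.mark_unhealthy("instance-1")       # Instance 1 fails
print([lb.pick() for _ in range(3)])  # only instance-2 receives traffic now
```

Taking a failed host out of rotation, rather than failing the request, is what lets Instance 2 keep the app online while Instance 1 is replaced.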

But ALB introduces two new key concepts: content-based routing and target groups.

1 Load Balancer, 2 Instances, 3 microservices!

We now have a single ALB that is configured to:

And now we only need 1 ALB!

Out of the box, ALB does all the things we need for a modern microservice application:

And best of all, there are cost savings. The base cost of an ALB is $0.0225 per hour, approximately $16 per month, and that supports up to 10 different backends.

On top of that are metered usage charges. When the ALB is handling new connections, active connections and/or serving traffic, we are charged $0.008 per hour for each Load Balancer Capacity Unit (LCU) consumed. It'll take a while to see how the new LCU concept works in production, but AWS promises cost savings over virtually any ELB usage.

This is a much, much better starting point than the three $18/month ELBs (plus $0.008 per GB of traffic) we were paying for before.
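A rough back-of-the-envelope comparison helps here. This sketch assumes the launch-era LCU definition (25 new connections per second, 3,000 active connections per minute, or 1 GB of traffic per hour, billed on whichever dimension is busiest), a classic ELB price of $0.025/hour (which matches the ~$18/month quoted above) and a 730-hour month. Treat all of those as assumptions to check against the current AWS pricing pages; the traffic numbers are made up for illustration.

```python
# Prices quoted in this article (USD, 2016).
ALB_PER_HOUR = 0.0225
LCU_PER_HOUR = 0.008
ELB_PER_GB = 0.008
# Assumptions not stated in the article: classic ELB at $0.025/hour
# (about $18/month) and an average month of 730 hours.
ELB_PER_HOUR = 0.025
HOURS_PER_MONTH = 730

# Assumed capacity of one LCU per dimension (launch-era figures; verify).
LCU_NEW_CONN_PER_SEC = 25
LCU_ACTIVE_CONN_PER_MIN = 3_000
LCU_GB_PER_HOUR = 1.0


def lcus(new_conn_per_sec: float, active_conn_per_min: float, gb_per_hour: float) -> float:
    """LCUs billed for one hour: only the busiest dimension counts."""
    return max(
        new_conn_per_sec / LCU_NEW_CONN_PER_SEC,
        active_conn_per_min / LCU_ACTIVE_CONN_PER_MIN,
        gb_per_hour / LCU_GB_PER_HOUR,
    )


def alb_monthly(avg_lcus: float) -> float:
    """One ALB: base hourly charge plus metered LCU-hours."""
    return (ALB_PER_HOUR + LCU_PER_HOUR * avg_lcus) * HOURS_PER_MONTH


def elbs_monthly(count: int, gb_per_month: float) -> float:
    """`count` classic ELBs: hourly charge each plus per-GB data processing."""
    return count * ELB_PER_HOUR * HOURS_PER_MONTH + ELB_PER_GB * gb_per_month


# Hypothetical steady traffic: 10 new conn/s, 1,500 active conn/min, 0.5 GB/hour.
load = lcus(10, 1_500, 0.5)  # active connections and bandwidth tie as busiest
gb = 0.5 * HOURS_PER_MONTH   # the same traffic expressed as ELB-billed gigabytes
print(f"1 ALB:  ${alb_monthly(load):.2f}/month")
print(f"3 ELBs: ${elbs_monthly(3, gb):.2f}/month")
```

With that hypothetical traffic the single ALB comes to roughly $19/month versus roughly $58/month for three ELBs. In this example the metered charges come out about equal, so nearly all of the gap is paying three base charges instead of one.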

The AWS announcement offers a tutorial for setting up some services manually.

However, we can use Convox, the open-source AWS toolkit, for a much faster path to test out ALB on top of a production-ready stack that uses CloudFormation to set up the VPC, AutoScaling Groups, ECS and ALB correctly.

With this Convox branch we can take a simple manifest describing our web and API containers and routing paths:

```yaml
web:
  image: nginx
  ports:
    - 80:80
  labels:
    - convox.router.path=/*
api:
  image: httpd
  ports:
    - 80:80
  labels:
    - convox.router.path=/api/*
```

And deploy it with a couple of commands:

```
$ convox install --version 20160813173628-alb
$ convox deploy
$ convox apps info
Name       micro
Status     running
Release    RVNEQBOZWQD
Processes  api web
Endpoints  http://alb-micro-1883074723.us-west-2.elb.amazonaws.com/
```

And see that Apache is serving stuff under the /api/* path, and nginx is serving everything else!

```
$ curl http://alb-micro-1883074723.us-west-2.elb.amazonaws.com/
<title>Welcome to nginx!</title>

$ curl http://alb-micro-1883074723.us-west-2.elb.amazonaws.com/api
<center><h1>404 Not Found</h1></center>
<hr><center>nginx/1.11.1</center>

$ curl http://alb-micro-1883074723.us-west-2.elb.amazonaws.com/api/
<html><body><h1>It works!</h1></body></html>
```
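Note that the 404 for `/api` comes from nginx, not Apache: the pattern `/api/*` only matches paths that start with `/api/`, so a bare `/api` falls through to the `/*` rule. Here's a small Python sketch of first-match rule evaluation using the two paths from the manifest. The `RULES` table and its priority numbers are assumptions for illustration (not how Convox actually builds its listener rules), and `fnmatchcase` only approximates ALB path patterns: both treat `*` as "zero or more characters" and `?` as "exactly one" and are case-sensitive, but `fnmatchcase` also gives `[...]` a special meaning.

```python
from fnmatch import fnmatchcase

# Hypothetical rule table derived from the manifest's convox.router.path labels.
# Assumption: the more specific pattern gets the lower priority number, so it
# is evaluated first.
RULES = [
    (1, "/api/*", "api"),  # Apache (httpd)
    (2, "/*", "web"),      # nginx
]


def target_group_for(path: str) -> str:
    """Return the target group of the first rule whose pattern matches path."""
    for _priority, pattern, group in sorted(RULES):
        # '*' matches any run of characters, including '/' and the empty string.
        if fnmatchcase(path, pattern):
            return group
    raise LookupError(f"no rule matches {path!r}")


for path in ["/", "/api", "/api/"]:
    print(path, "->", target_group_for(path))
```

Running it routes `/` and `/api` to `web` and `/api/` to `api`, matching the curl output. If we wanted a bare `/api` to reach Apache too, we'd need an extra rule for that exact path.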

See the Getting Started With Convox guide and join the Convox Slack for more help testing out ALBs with Convox.

ELB and EC2 have dominated the cloud for the past 7 years. ALB looks like a massive improvement over ELB in almost every way.

It greatly simplifies our production stack, removing the need for many ELBs or complex microservice discovery software. It natively supports HTTP, HTTP/2 and websockets, removing the need for TCP and keep-alive hacks.

And it’s significantly cheaper for any real microservice app.

It’s clear that ALB and ECS are the newest and best way to use AWS. With these services the big challenges of running containers are Amazon’s responsibility.

I’ll certainly be adopting ALB for my production services over time.

Noah is the CTO of Convox and was previously Platform Architect at Heroku. He’s been supporting production services at scale on AWS since the early days of EC2 and S3. Follow @nzoschke on Twitter.